Run:ai is a software platform designed to optimize and manage artificial intelligence (AI) workloads on Kubernetes clusters.

It offers features that streamline AI development and deployment processes while maximizing resource utilization.

Key Features:

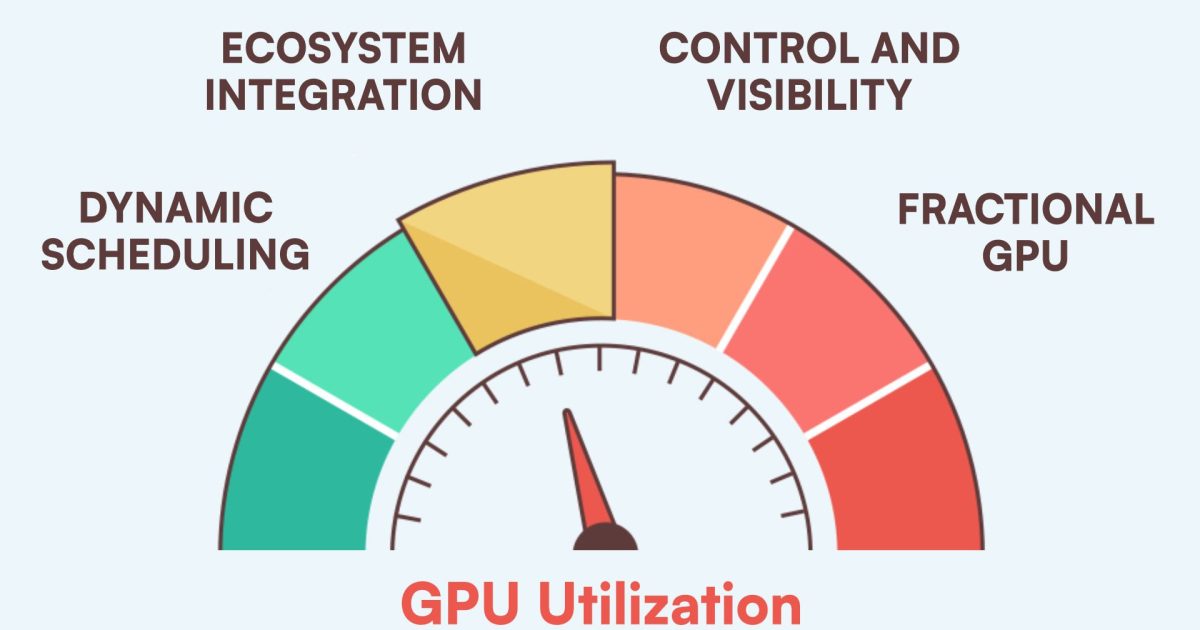

- GPU Fractionalization: Divides GPUs into smaller units to enable multiple containers to share a single GPU, increasing efficiency and reducing costs.

- Advanced Scheduling: Manages tasks in batches using multiple queues, allowing administrators to define rules, policies, and priorities for each queue to optimize resource allocation.

- Dynamic Resource Allocation: Assigns compute power to users automatically, ensuring jobs get the resources they need while maximizing cluster utilization.

- Monitoring and Visibility: Provides insights into cluster resources, workloads, and user activity, allowing administrators to track usage and plan for future capacity.

- Integration with Kubernetes: Runs on top of Kubernetes, using its scalability and flexibility to manage AI workloads.
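
To make the GPU fractionalization idea from the list above concrete, here is a minimal Python sketch of several jobs sharing physical GPUs by requesting a fraction of one. It is a toy model, not Run:ai's implementation or API: the `Gpu` class, the `place` function, the first-fit strategy, and the job names are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Gpu:
    """One physical GPU whose remaining capacity is tracked as a fraction of 1.0."""
    name: str
    free: float = 1.0
    tenants: list = field(default_factory=list)


def place(gpus, job, fraction):
    """First-fit placement (an assumed strategy): use the first GPU with room."""
    for gpu in gpus:
        # Small epsilon guards against floating-point rounding in the fractions.
        if gpu.free + 1e-9 >= fraction:
            gpu.free -= fraction
            gpu.tenants.append(job)
            return gpu.name
    return None  # no GPU can host this job right now


# Hypothetical workload: two notebooks, one full-GPU training job, one inference job.
gpus = [Gpu("gpu-0"), Gpu("gpu-1")]
requests = [("notebook-a", 0.5), ("notebook-b", 0.25), ("train-c", 1.0), ("infer-d", 0.25)]
placements = {job: place(gpus, job, fraction) for job, fraction in requests}
print(placements)
```

In this sketch the three fractional jobs end up on `gpu-0` and the full-GPU training job takes `gpu-1`, so four jobs fit on two devices instead of needing four. A real scheduler would also have to isolate memory and compute between tenants, which this model ignores.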

Use Cases:

- AI Model Development: Facilitates the development of AI models by providing the necessary resources and infrastructure for training and experimentation.

- Inference Workloads: Optimizes inference workloads by efficiently allocating resources and ensuring fast response times for AI-powered applications.

- Research Environments: Supports research environments by enabling scientists and researchers to run complex AI experiments at scale.

- Data Science Workflows: Streamlines data science workflows by simplifying the management of compute resources and enabling collaboration among teams.

- Enterprise AI Deployment: Accelerates the deployment of AI solutions in enterprise environments by providing a robust platform for managing AI workloads.

How Run:ai Works:

- Submit Job: Users submit AI jobs to the Run:ai platform, specifying the required resources (e.g., GPU, memory).

- Resource Allocation: Run:ai dynamically allocates resources from the cluster based on the job’s requirements and available capacity.

- Job Execution: The job runs on the allocated resources, and Run:ai manages the execution and monitoring of the job.

- Results Retrieval: Upon completion, the user retrieves the results of the job from the Run:ai platform.
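
The four steps above can be sketched as a small simulation. This is a hedged illustration only: `ToyCluster`, `Job`, the state names, and the integer priority scheme are hypothetical and do not correspond to the Run:ai API or CLI. The sketch assumes that a lower priority number is scheduled first and that a job that does not fit simply stays queued.

```python
import heapq
from dataclasses import dataclass, field
from itertools import count


@dataclass(order=True)
class Job:
    priority: int                       # lower number = scheduled first (assumption)
    seq: int                            # submission order, breaks priority ties
    name: str = field(compare=False)
    gpus: int = field(compare=False)
    state: str = field(default="Pending", compare=False)
    result: str = field(default=None, compare=False)


class ToyCluster:
    """Hypothetical stand-in for a scheduler; not the Run:ai API."""

    def __init__(self, total_gpus):
        self.free_gpus = total_gpus
        self.pending = []               # heap ordered by (priority, seq)
        self.running = []
        self._seq = count()

    def submit(self, name, gpus, priority):
        """Step 1: queue a job together with its resource request."""
        job = Job(priority, next(self._seq), name, gpus)
        heapq.heappush(self.pending, job)
        return job

    def allocate(self):
        """Step 2: start pending jobs in priority order while capacity remains."""
        waiting = []
        while self.pending:
            job = heapq.heappop(self.pending)
            if job.gpus <= self.free_gpus:
                self.free_gpus -= job.gpus
                job.state = "Running"   # Step 3: the job executes
                self.running.append(job)
            else:
                waiting.append(job)     # stays queued until GPUs free up
        for job in waiting:
            heapq.heappush(self.pending, job)

    def finish(self, job):
        """Step 4: mark the job complete, release its GPUs, store a result."""
        self.running.remove(job)
        self.free_gpus += job.gpus
        job.state = "Succeeded"
        job.result = f"{job.name}: done"


cluster = ToyCluster(total_gpus=4)
train = cluster.submit("train", gpus=4, priority=1)
sweep = cluster.submit("sweep", gpus=2, priority=2)

cluster.allocate()
print(train.state, sweep.state)   # Running Pending

cluster.finish(train)
cluster.allocate()
print(train.result, sweep.state)  # train: done Running
```

Note that `allocate` lets a smaller, lower-priority job start when a higher-priority job does not fit. That is one possible design choice for keeping GPUs busy, and the policy on a real cluster may differ.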